(As requested by Richard, I'm also posting my question here.)

I recently started trying out CodeRunner for a Moodle quiz and have run into a problem that I don't know how to solve. I'm not even sure whether what I want is possible with CodeRunner as it is now.

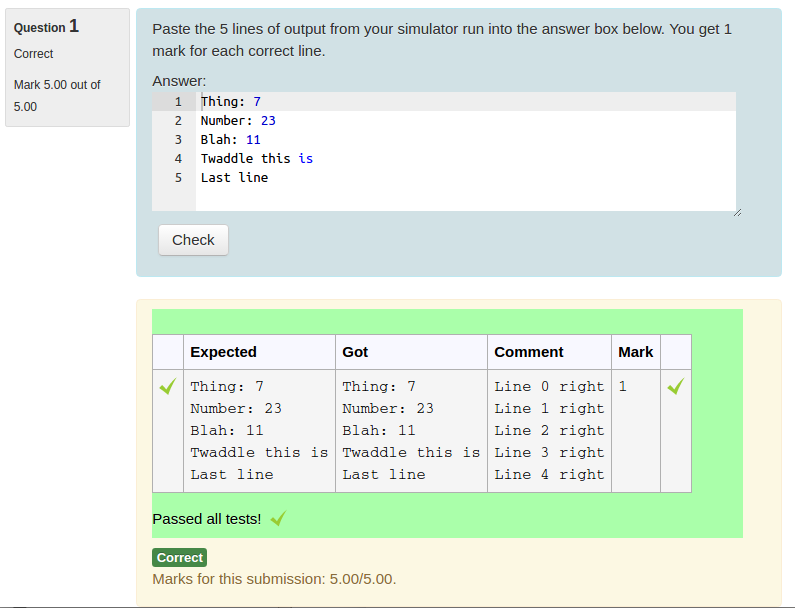

So, I have (mostly) built a custom template, as our students will not actually write code. Instead, they will submit their simulation netlist (text), which will then be compared to the given netlist. The template is written in Python3; the student answer is split into lines (strings), which are then checked against the given test code (= the actual netlist).
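
To make the setup concrete, here is a minimal sketch of the kind of line-by-line check I mean. It is not my actual template: the function names are made up for illustration, and the whitespace normalisation is an assumption about what should count as "the same line".

```python
def normalise(netlist: str) -> list[str]:
    """Split a netlist into lines, collapse runs of whitespace, drop empty lines."""
    return [" ".join(line.split()) for line in netlist.splitlines() if line.strip()]


def compare_netlists(student: str, expected: str) -> tuple[list[str], list[str]]:
    """Return (expected lines missing from the answer, answer lines not expected).

    Line order is ignored here; that is an assumption, not a requirement.
    """
    student_lines = normalise(student)
    expected_lines = normalise(expected)
    missing = [line for line in expected_lines if line not in student_lines]
    unexpected = [line for line in student_lines if line not in expected_lines]
    return missing, unexpected
```

In the real template the two strings would come from the student answer and the test code of the test case rather than from function arguments.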

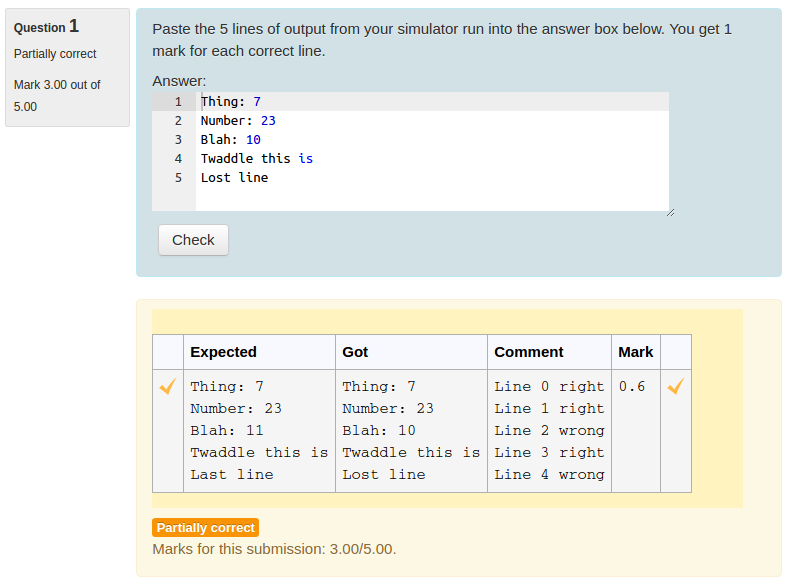

Now, the problem comes when I need to give some kind of feedback. There are a number of ways to do things in the simulator that I would like to award full marks for, while still giving feedback such as "Next time, use this [simulation command] instead of [simulation command used]" or something similar. But if I just print this information while running the tests, the exact-match grader will (of course) give 0 points, because the output no longer exactly matches the expected result.

I was thinking of getting around this problem by adding a "results column" item called feedback, but I don't know how to get the template to pass along this information, which is gathered in a feedback list while the tests are run on the student's answer.
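
Roughly, what I have in mind is the sketch below. It assumes (and I may well be wrong here) that the template can act as its own grader by printing a single JSON object with a `fraction` field, and that an extra field such as `feedback` could then be displayed through a result-columns entry like `["Feedback", "feedback"]`. The `ALTERNATIVES` table, the command names in it, and the `grade` function are placeholders made up for illustration.

```python
import json

# Placeholder mapping: accepted-but-not-preferred command -> preferred command.
ALTERNATIVES = {".altcmd": ".prefcmd"}


def grade(student_answer: str, expected: str) -> dict:
    """Compare netlists line by line, collecting feedback instead of printing it."""
    feedback = []
    canonical = []
    for raw in student_answer.splitlines():
        line = " ".join(raw.split())  # collapse whitespace
        if not line:
            continue
        first, _, rest = line.partition(" ")
        if first in ALTERNATIVES:
            preferred = ALTERNATIVES[first]
            feedback.append(f"Next time, use {preferred} instead of {first}.")
            # Treat the alternative as equivalent so it still earns full marks.
            line = f"{preferred} {rest}".strip()
        canonical.append(line)
    expected_lines = [" ".join(l.split()) for l in expected.splitlines() if l.strip()]
    fraction = 1.0 if canonical == expected_lines else 0.0
    return {
        "fraction": fraction,               # mark for this test, between 0 and 1
        "got": student_answer.strip(),      # what the student submitted
        "feedback": "\n".join(feedback),    # hints, kept out of the compared output
    }


# The template would print exactly one JSON object per test.
print(json.dumps(grade(".altcmd 1 2\nR1 1 2 1k", ".prefcmd 1 2\nR1 1 2 1k")))
```

The point of the sketch is that the hints never become part of the text that is compared, so an acceptable alternative can still get full marks. What I can't work out is how to wire this into the question settings.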

I also thought about just updating one of the existing fields (like 'extra', for example, as it won't otherwise be used), but I can't seem to find information on how to do that either. I tried modifying the code from "An advanced grading-template example", but I didn't manage to make that work.

Any help would be greatly appreciated.

Best regards,

Heli