I'm not quite sure what it would mean to "add TeX as a programming language". The usual model in CodeRunner is for student code to be executed (on the Jobe server) and the output to be checked to see if it matches the expected output. The output is usually text and a simple text-equality check is performed. For LaTeX the output is some form of graphic, depending on the output filter. Checking the correctness of a graphic or an image is going to be problematic.

One approach might be to render the student's code to PDF using pdflatex and compare the result with your expected answer rendered the same way. You might have to filter the PDF to remove content that varies from run to run, e.g. date stamps, the source file name, etc. I think that could be made to work, but it would be hard to generate meaningful error messages. If the answer were correct you could display OK, but if it were wrong you could perhaps use MathJax to display both your rendered expected answer and whatever MathJax made of their (wrong) answer. But that wouldn't always be helpful.
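
A minimal sketch of the PDF-comparison idea in Python, assuming pdflatex is installed on the Jobe server and that both the student's code and the expected answer are complete LaTeX documents. The helper names (`render_pdf`, `normalise_pdf`, `pdfs_match`) are hypothetical. The regular expressions only blank out the creation/modification dates and the trailer `/ID`, and they assume those entries appear uncompressed in the file; other run-dependent content may need similar treatment.

```python
import re
import subprocess
import tempfile
from pathlib import Path

# Metadata that pdflatex typically writes differently on every run.
# Assumption: these entries appear uncompressed in the PDF bytes.
_VOLATILE_PATTERNS = [
    (re.compile(rb"/CreationDate\s*\([^)]*\)"), b"/CreationDate ()"),
    (re.compile(rb"/ModDate\s*\([^)]*\)"), b"/ModDate ()"),
    (re.compile(rb"/ID\s*\[\s*<[0-9A-Fa-f]*>\s*<[0-9A-Fa-f]*>\s*\]"), b"/ID []"),
]


def normalise_pdf(data: bytes) -> bytes:
    """Blank out run-dependent metadata so two renderings can be compared."""
    for pattern, replacement in _VOLATILE_PATTERNS:
        data = pattern.sub(replacement, data)
    return data


def pdfs_match(a: bytes, b: bytes) -> bool:
    """True if two PDFs are byte-identical once volatile metadata is blanked."""
    return normalise_pdf(a) == normalise_pdf(b)


def render_pdf(tex_source: str) -> bytes:
    """Compile a complete LaTeX document with pdflatex and return the PDF bytes.

    A fixed file name is used so the source name cannot differ between the
    student's rendering and the expected one.
    """
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "answer.tex"
        src.write_text(tex_source)
        subprocess.run(
            ["pdflatex", "-interaction=nonstopmode", "-halt-on-error",
             "-output-directory", tmp, str(src)],
            cwd=tmp, check=True, capture_output=True,
        )
        return (Path(tmp) / "answer.pdf").read_bytes()
```

In a template you would compare `render_pdf(student_source)` with `render_pdf(expected_source)` using `pdfs_match` and print OK or a failure message. Byte comparison is brittle, though: two answers that look the same on screen can still produce different bytes, which is part of why useful error messages would be hard to produce.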

You could explore such options yourself without any changes to CodeRunner, although you'd have to add the required LaTeX tools to the Jobe server. See https://github.com/trampgeek/moodle-qtype_coderunner/blob/master/Readme.md#supporting-or-implementing-new-languages

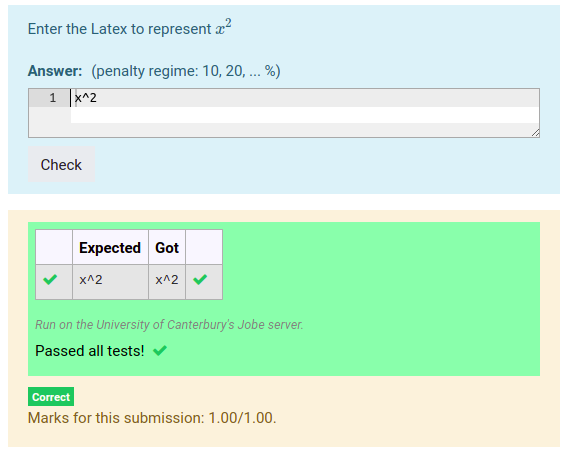

However, CodeRunner can be used to write any question whose answer is text that the question author can validate with a computer program. So if, as a trivial example, you wanted the student to write LaTeX to represent x², you could use a Python3 question type to check whether the student submitted the expected answer, x^2. You would probably want to turn off the Ace editor or set the Ace language to LaTeX (which doesn't seem to do anything but does at least suppress extraneous syntax colouring).

Here's that trivial example, using the following code as the Python3 template:

```python
__student_answer__ = """{{ STUDENT_ANSWER | e('py') }}"""

print(__student_answer__)
```
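
The `e('py')` filter matters for LaTeX in particular, because LaTeX answers are full of backslashes. As I understand it, the filter escapes backslashes and quotes in the student's answer before it is pasted into the triple-quoted string; without that, Python would read sequences such as the `\f` in `\frac` as escape codes. A small illustration in plain Python (no Twig involved):

```python
# A LaTeX answer pasted verbatim into a Python string literal:
unescaped = """\frac{1}{2}"""  # Python reads "\f" as a form-feed character
# The same answer with its backslash escaped, as an escaping filter would do:
escaped = """\\frac{1}{2}"""

print(repr(unescaped))  # '\x0crac{1}{2}'  (the backslash and "f" are gone)
print(escaped)          # \frac{1}{2}
```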

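With that template the question is obviously ridiculously generous under the penalty regime: a wrong submission shows the expected output x^2 in the result table, so the student can simply copy it on the next attempt. For a more meaningful question you'd either set the penalty regime to 100% or refine the checker to print either "OK" or "Wrong answer" (with the Expected output being OK).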
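
A sketch of such a refined checker, written as a variant of the Python3 template. `EXPECTED`, `normalise` and `is_correct` are hypothetical names. The normalisation (drop all whitespace, and drop braces around single-character superscripts and subscripts) is just one crude way of also accepting equivalent spellings such as `x^{2}`, and is only suitable for short fragments.

```python
import re

__student_answer__ = """{{ STUDENT_ANSWER | e('py') }}"""

EXPECTED = "x^2"  # hypothetical expected answer for this question


def normalise(latex: str) -> str:
    """Canonicalise a short LaTeX fragment before comparison.

    Removes all whitespace, then the braces around single-character
    superscripts/subscripts, so x^2, x^{2} and 'x ^ { 2 }' compare equal.
    """
    latex = re.sub(r"\s+", "", latex)
    return re.sub(r"([_^])\{(.)\}", r"\1\2", latex)


def is_correct(answer: str) -> bool:
    return normalise(answer) == normalise(EXPECTED)


# Print a fixed message rather than the answer itself, so a wrong
# submission does not reveal the expected LaTeX in the result table.
print("OK" if is_correct(__student_answer__) else "Wrong answer")
```

The test case's Expected output is then just OK.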