Thanks Tim. I agree it would have been good to have a walkthrough unit test to use, but all my walkthroughs are testing a single question, not a whole quiz. I did try to modify one of the mod/quiz walkthroughs to use with CodeRunner but couldn't figure out how and gave up after an hour or so. Time is a bit too precious, sorry.

But I believe I understand exactly what is happening, although not necessarily what to do about it.

When I originally wrote the behaviour_adapted_for_coderunner class I mostly copied code from the adaptive class without, I confess, understanding quite a lot of it. After poring over it again I'm afraid I still don't understand the logic around invalid submissions.

The line

$status = $this->process_save($pendingstep);

returns question_attempt::DISCARD if the question attempt state is FINISHED or if the submission response is the same as in the previous recorded step.

The behaviour then checks for an incomplete (invalid) submission, which is kept if it follows a valid one or otherwise is kept or discarded according to the return value from process_save. This is the bit I don't understand.

If instead the response is complete, another check with is_same_response is done, but now with the previously saved try step rather than simply the previous step. If the responses are the same, the new step is discarded.

However, with CodeRunner things are even more complex. The problem is that is_same_response does not get given the information it needs to distinguish prechecks from checks. When I call it from within my behaviour I include a check on whether it's a precheck or a check so that two checks in a row, or two prchecks, are not considered to be the same response. [Edit: that should be only two checks in a row, or two prechecks, are considered to be the same response.] This all works fine normally. BUT, the extra logic I included to deal with invalid submissions (the sandbox is down, say) follows the above logic from the adaptive behaviour, which uses the (invalid) return value from process_save. That means that if there are two invalid submissions in a row with the same response, the second is discarded, even if it was a Check following a Precheck [Edit: or vice-versa]. BUG!

My apparently working fix (see the

screen shots below) is to ignore the return value from process_save, and discard a submission only if there are two successive

try submissions containing the same response and both are either checks or prechecks. If this is a sufficient condition for discarding, then I can simplify my logic quite a lot. But I'm not sure I understand the logic around discarding well enough. Are there other situations I need to handle?

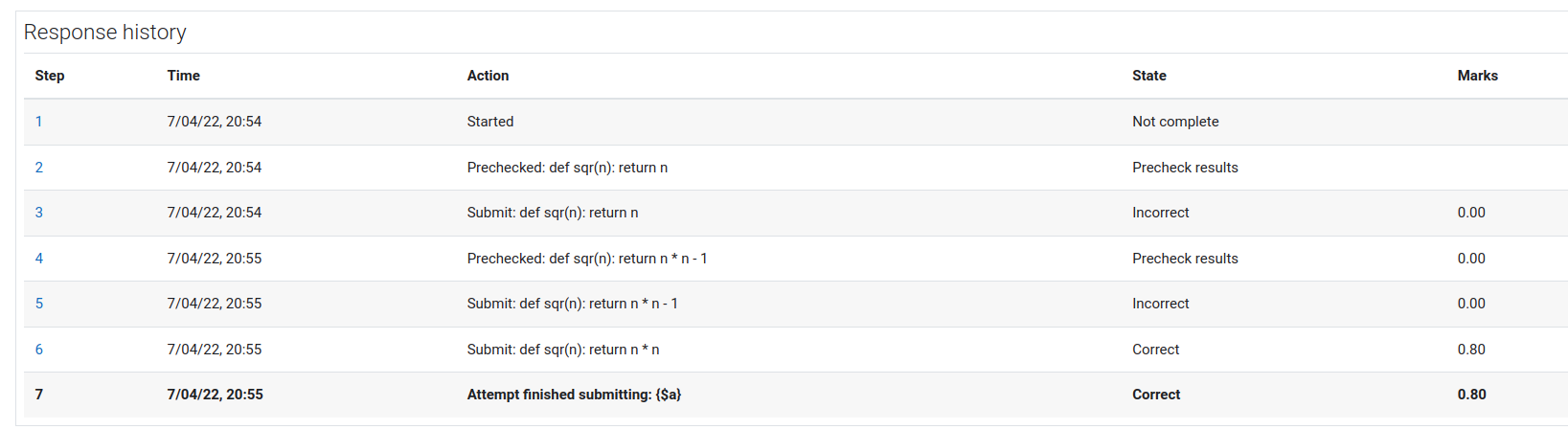

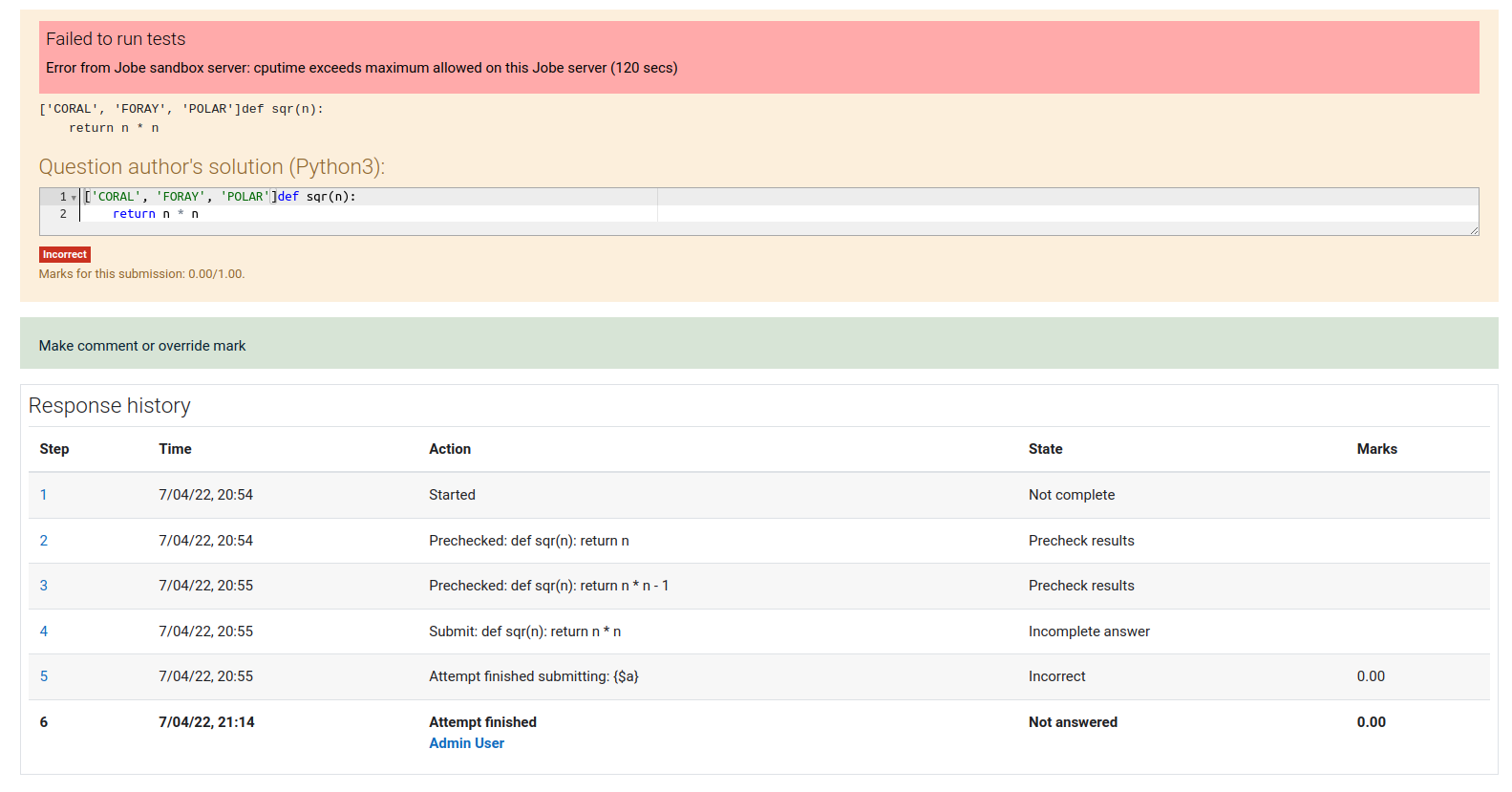

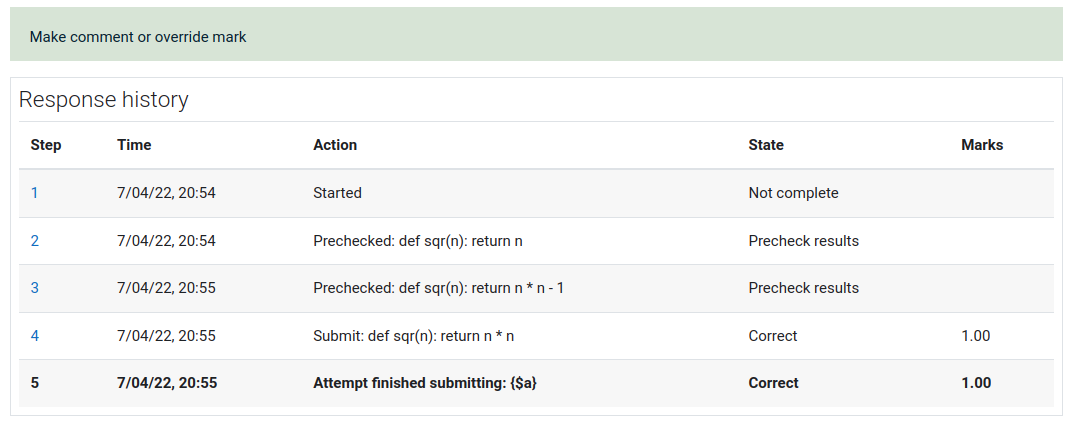

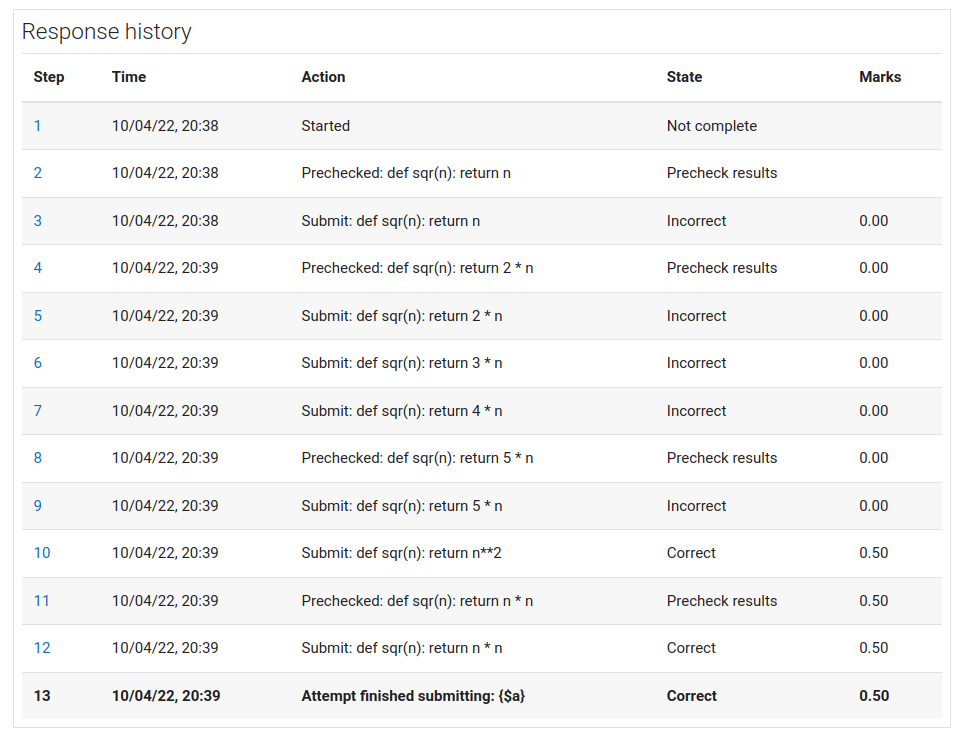

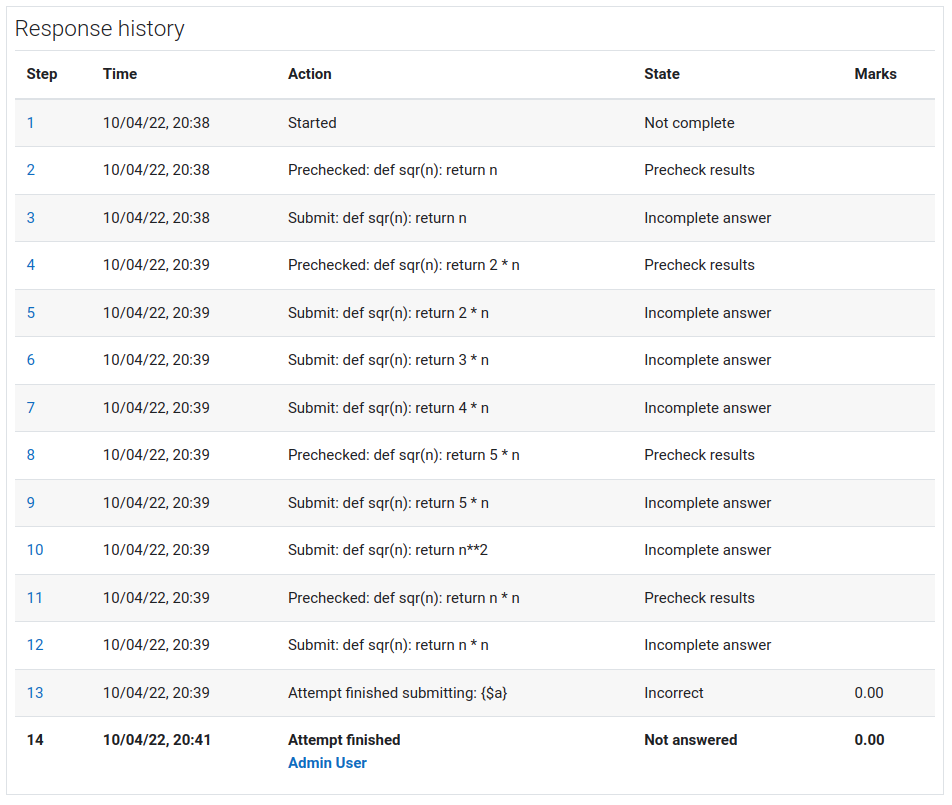

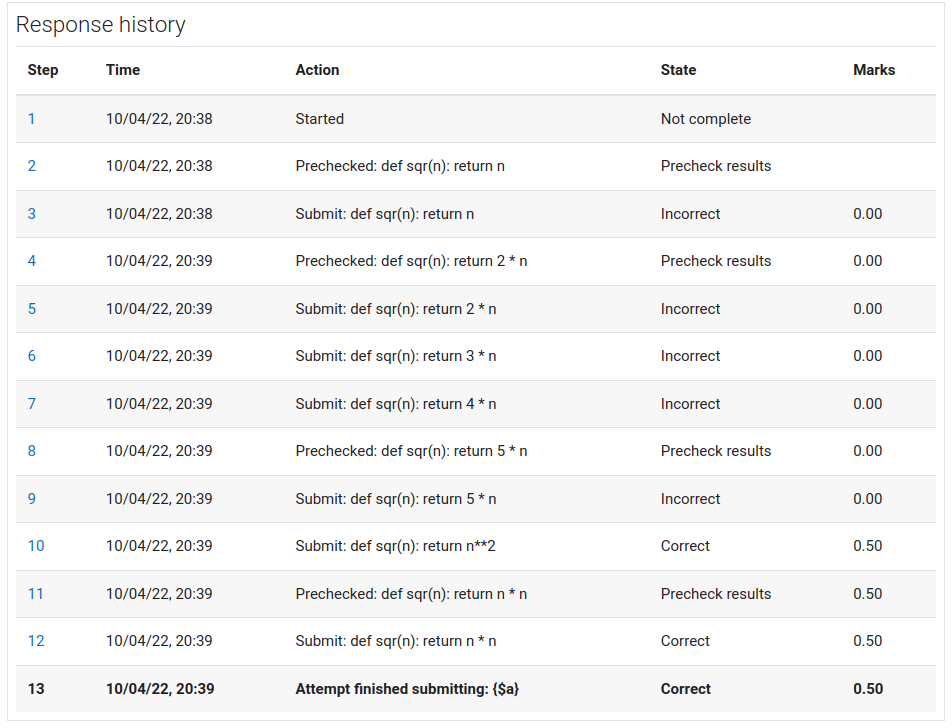

Here are the

screen shots of the response history following a complex series of prechecks and checks with a valid question, then the response history after breaking the question (setting the time limit to an out-of-bounds value, resulting in sandbox errors) and regrading, then the history after fixing the question and regrading yet again. The third one is the same as the first, so all is well again.

Before breaking the question

After breaking the question and regrading

After fixing the question and regrading again