Hi Richard and others,

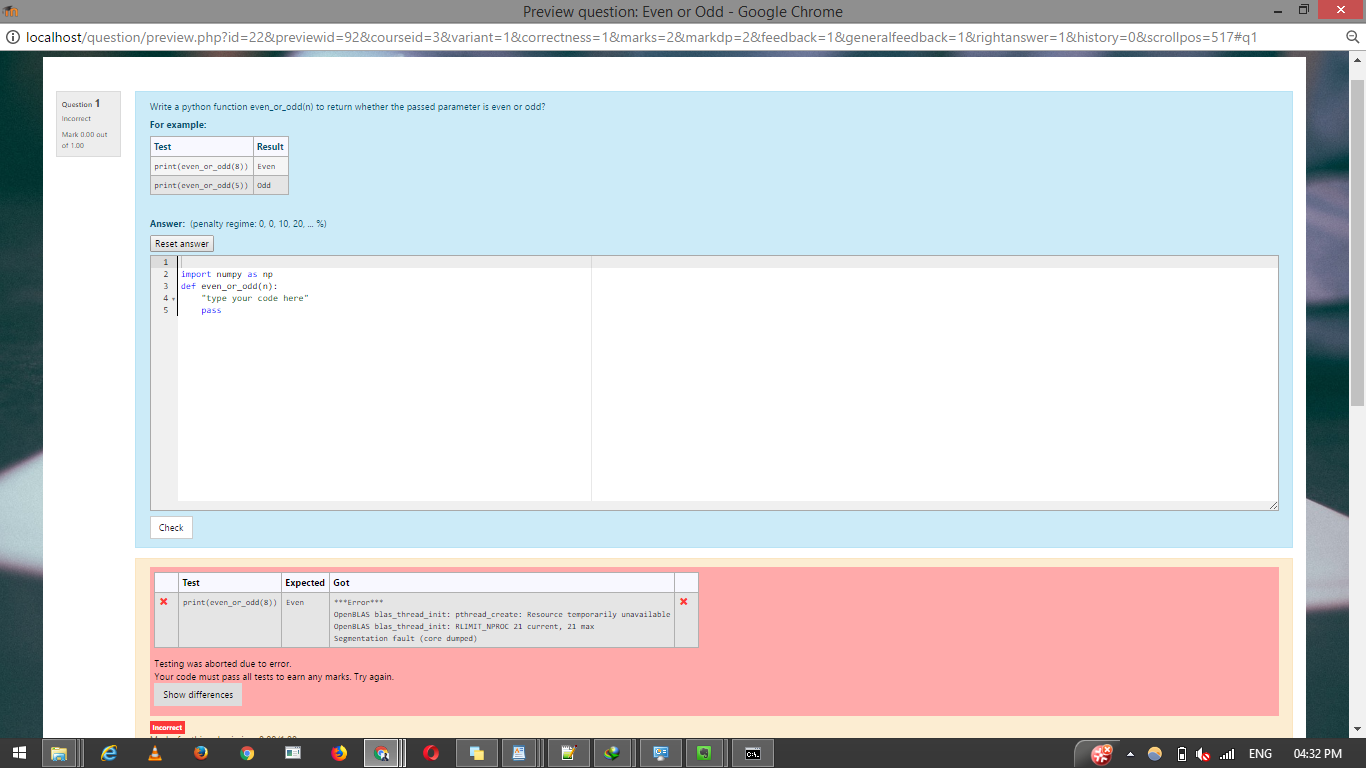

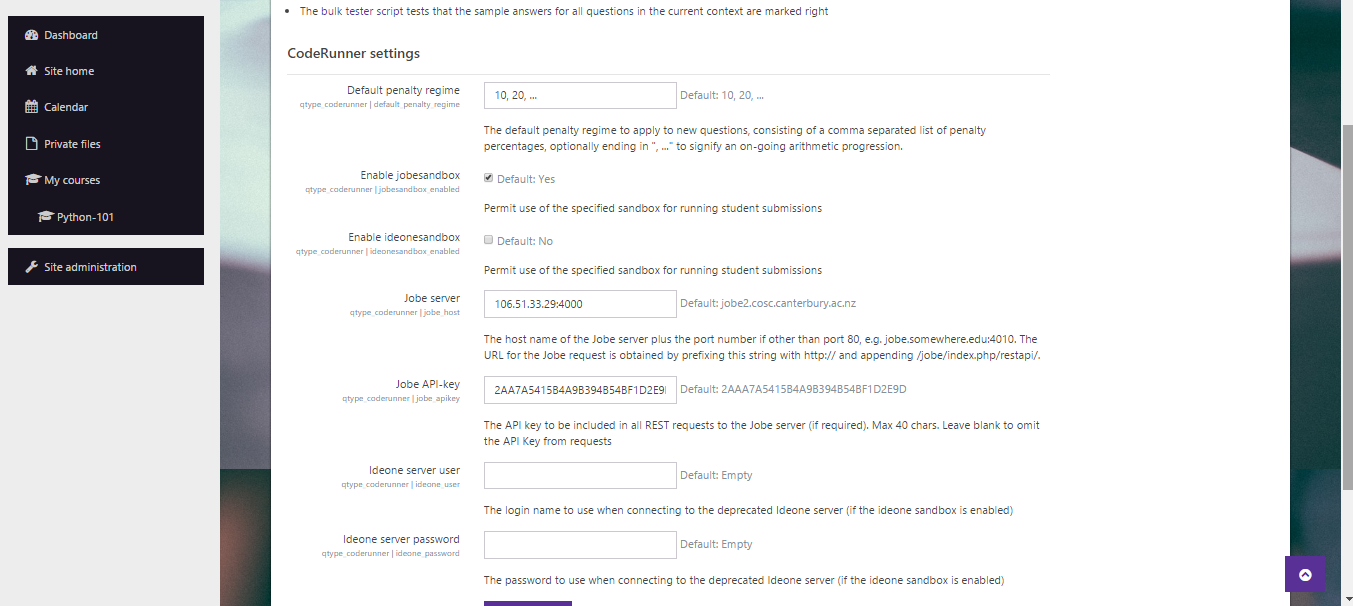

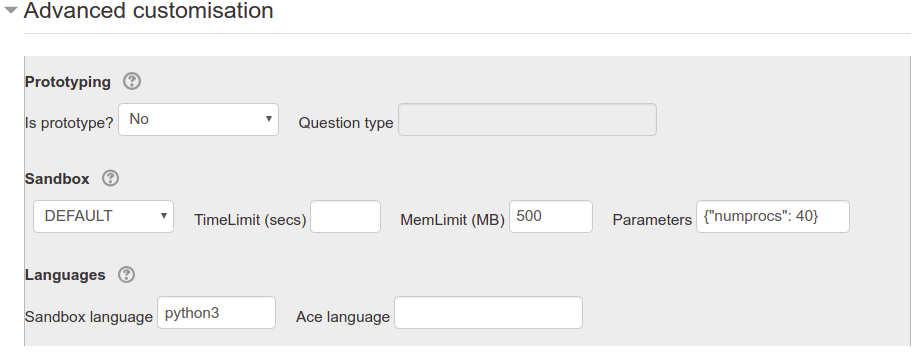

I have been having the same problem described in this thread for the past few days. This is my second CodeRunner setup; the first one did not have this issue. Maybe the version of the operating system (Ubuntu 18, I think) has something to do with it as well.

Anyway, the solution proposed by Richard above did not work for me. After some googling, I found a hack:

https://stackoverflow.com/questions/52026652/openblas-blas-thread-init-pthread-create-resource-temporarily-unavailable

Basically, we need to add the following to the code:

import os

os.environ['OPENBLAS_NUM_THREADS'] = '1'

before

import numpy as np

I added this to both the model solution on CodeRunner and the student submission. This hack seems to work, and I don't get the segmentation fault anymore.
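For anyone who wants to reuse it, here is a minimal sketch of the same hack wrapped in a small function. The helper name `limit_openblas_threads` is my own invention (not part of CodeRunner or numpy), and the check against `sys.modules` is just an extra guard I added, since the variable presumably has to be set before numpy is first imported for it to have any effect.

```python
import os
import sys


def limit_openblas_threads(count=1):
    """Hypothetical helper: cap the number of OpenBLAS threads.

    Returns True if numpy had not been imported yet when the variable
    was set, False otherwise (in which case the setting may be ignored).
    """
    os.environ["OPENBLAS_NUM_THREADS"] = str(count)
    return "numpy" not in sys.modules


# Call this at the very top of the program, before any numpy import.
applied_in_time = limit_openblas_threads(1)
if not applied_in_time:
    print("warning: numpy was already imported; the limit may not apply")

# The import is guarded only so that the sketch runs where numpy is missing.
try:
    import numpy as np
except ImportError:
    np = None
```

In a question where the student code is pasted in after some prefix, these lines would need to come before the student's own `import numpy`. I have only tried the plain two-line version shown above, so treat the helper as a suggestion.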

It probably has something to do with the operating environment where CodeRunner is installed. Hope this helps!

Regards

Padmanabhan

IIT Mandi, India