Hi Michael

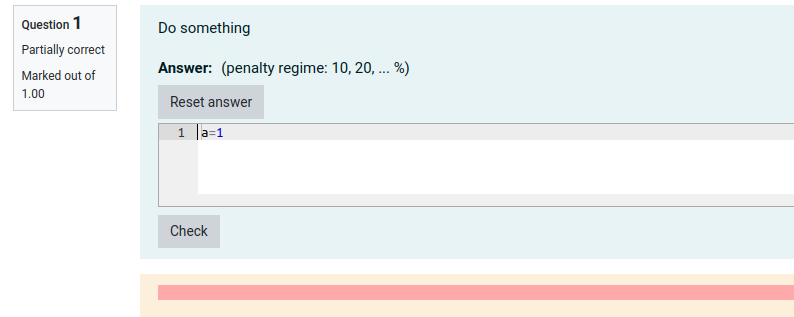

Re point 1: Moodle does not actually seem to show the mark for a question in preview mode. This applies even to regular CodeRunner questions, but you tend not to notice because you get the nice results table. If the question were in an actual quiz, you would see the mark.

However, what you probably want to do here is output the mark in a very clear way to the student. Fortunately with the template combinator grader you get to control the exact out put the student will see! Say you have a way of marking student code

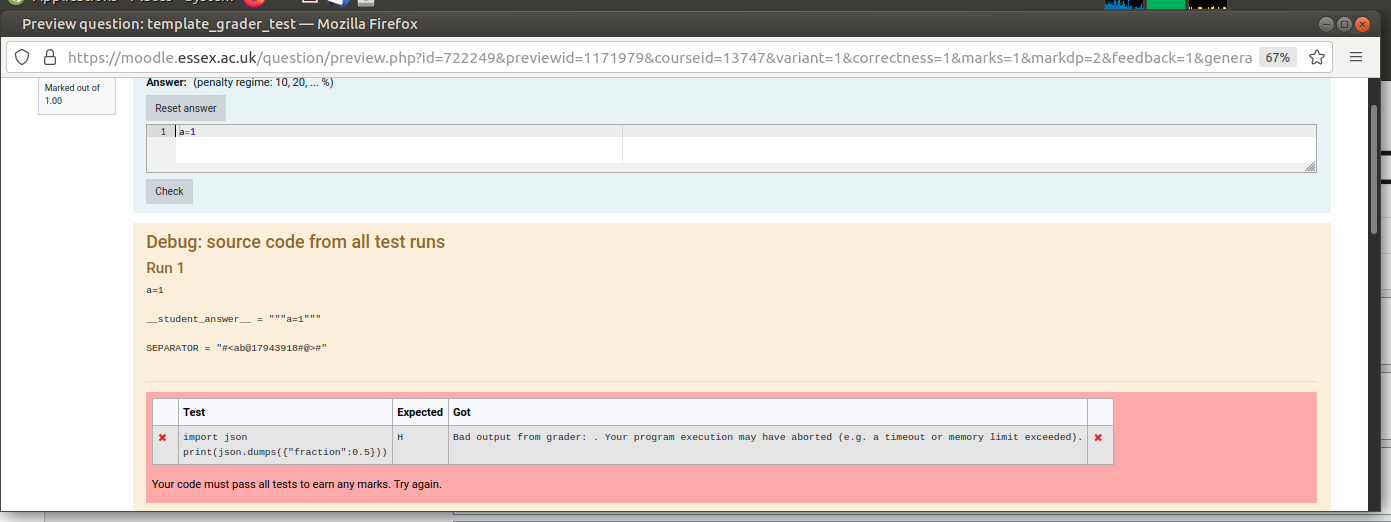

that produces a mark out of 100. Then you might like to try something like the following.

```python
import json

# Have some code to compute the student's mark.
student_mark = 78

output = {
    'fraction': student_mark / 100,
    'prologuehtml': f"<h2>Your code received {student_mark}/100 marks</h2>"
}

print(json.dumps(output))
```

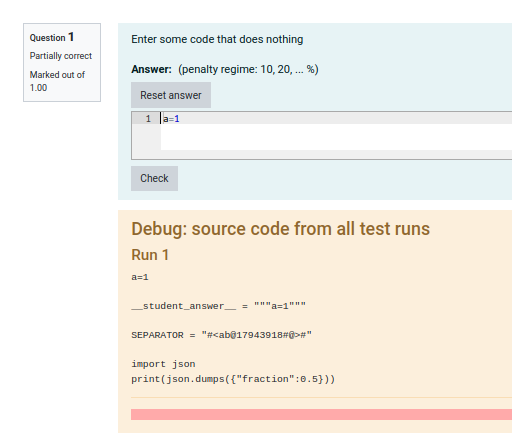

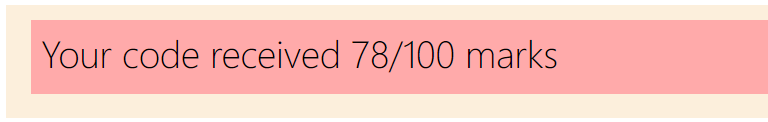

With the fraction set to 0.78, the student would get 78% of the marks assigned to that question in the quiz. The output would look something like the following:

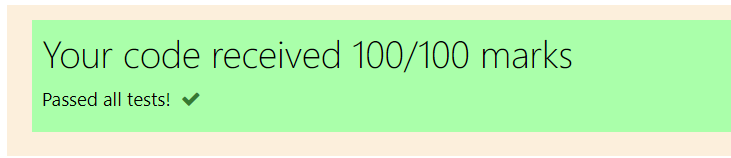
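To make the fraction arithmetic concrete, here is a minimal sketch. It assumes the quiz simply multiplies the question's maximum mark by the fraction (ignoring any penalty regime the question may use), and it clamps the value to the 0 to 1 range that 'fraction' is meant to lie in. The function `awarded_marks` and the 5-mark question are hypothetical, for illustration only.

```python
def awarded_marks(student_mark, out_of, question_marks):
    """Return (fraction, quiz_marks) for a raw mark.

    Hypothetical helper: assumes the quiz awards fraction * question_marks,
    ignoring any penalties.
    """
    fraction = student_mark / out_of
    # Keep the fraction within 0..1 in case the marking code over- or undershoots.
    fraction = max(0.0, min(1.0, fraction))
    return fraction, fraction * question_marks


# A hypothetical question worth 5 marks in the quiz.
fraction, marks = awarded_marks(78, 100, 5)
print(fraction, marks)
```

With a mark of 78/100 on a 5-mark question, this gives a fraction of 0.78 and about 3.9 marks (floating-point rounding may show extra digits).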

Unfortunately, we can't do anything about the red banner. As far as I am aware, CodeRunner always displays a red background if the 'fraction' for the question is less than 1; otherwise it is green (see below). This is something I would like to see fixed, because in cases such as this a mark of 78/100 might be quite good and it seems a bit harsh to highlight it in red.

Ultimately, you can include any HTML you like in the 'prologuehtml' field to format the display however you want, from the simple output shown above to much more complicated output with extra feedback or a breakdown of the marks the student is receiving.
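As a rough sketch of the "breakdown of the marks" idea, the snippet below builds a 'prologuehtml' fragment from a list of marking criteria and derives the fraction from their totals. The criterion names, their marks, and the `breakdown_html` helper are all hypothetical; `html.escape` is used so that any text coming from the marking code cannot break the HTML.

```python
import html
import json


def breakdown_html(criteria):
    """Build HTML for 'prologuehtml' from (name, awarded, available) tuples.

    Returns (html_fragment, total_awarded, total_available).
    """
    total = sum(awarded for _, awarded, _ in criteria)
    out_of = sum(available for _, _, available in criteria)
    rows = "".join(
        f"<tr><td>{html.escape(name)}</td><td>{awarded}/{available}</td></tr>"
        for name, awarded, available in criteria
    )
    heading = f"<h2>Your code received {total}/{out_of} marks</h2>"
    table = f"<table><tr><th>Criterion</th><th>Mark</th></tr>{rows}</table>"
    return heading + table, total, out_of


# Hypothetical marking results.
criteria = [("Correctness", 60, 70), ("Style", 18, 30)]
prologue, total, out_of = breakdown_html(criteria)
print(json.dumps({"fraction": total / out_of, "prologuehtml": prologue}))
```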

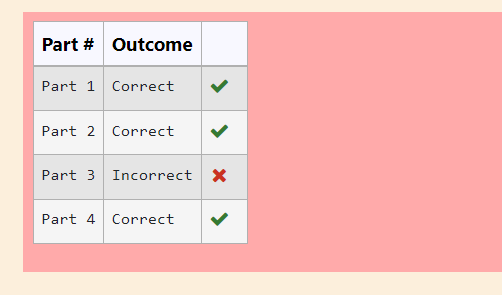

You can also include a customised version of the table CodeRunner would normally output, using the 'testresults' field. For example, in one of my questions that consists of multiple parts, I use the template grader to output a table that looks as follows.

In this case the 'testresults' field is set to a 2D list representing the table, as follows (note that 'iscorrect' is a special column header recognised by CodeRunner, which displays either a tick or a cross in that column).

```python
[
    ["Part #", "Outcome", "iscorrect"],
    ["Part 1", "Correct", True],
    ["Part 2", "Correct", True],
    ["Part 3", "Incorrect", False],
    ["Part 4", "Correct", True],
]
```

You could put whatever you like in this table. If you have set the 'prologuehtml' field (as I did in my earlier example), it will appear before the table. If you want some HTML/text after the table, you can use the 'epiloguehtml' field.
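Putting the pieces together, here is a sketch of a complete grader output that generates the 'testresults' rows from per-part results and adds both 'prologuehtml' and 'epiloguehtml'. It assumes each part is simply passed or failed and that all parts are worth the same, so the fraction is the share of parts passed; `build_output` and the part results are hypothetical, and your own marking scheme may weight parts differently.

```python
import json


def build_output(part_results):
    """Build the grader's JSON-ready dict from a list of booleans, one per part.

    Assumption: every part carries equal weight.
    """
    if not part_results:
        raise ValueError("need at least one part")
    # First row holds the column headers; 'iscorrect' shows as a tick or cross.
    testresults = [["Part #", "Outcome", "iscorrect"]]
    for i, correct in enumerate(part_results, start=1):
        testresults.append([f"Part {i}", "Correct" if correct else "Incorrect", correct])
    passed = sum(part_results)
    return {
        "fraction": passed / len(part_results),
        "prologuehtml": f"<h2>You passed {passed} of {len(part_results)} parts</h2>",
        "testresults": testresults,
        "epiloguehtml": "<p>Review any incorrect parts before resubmitting.</p>",
    }


# Hypothetical results for a four-part question.
output = build_output([True, True, False, True])
print(json.dumps(output))
```

`json.dumps` turns the Python `True`/`False` values into JSON `true`/`false`, which is what the grader output needs.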

Hope this is helpful

Cheers,

Matthew