Hello all,

We have been running CodeRunner at Aalto University since 2016, and it seems that we discover new ways to use it every year (thank you so much Richard and everybody else!). For example, we use it to check students' Excel spreadsheets in our chemistry and materials science courses.

As the number of CodeRunner courses and students is increasing steadily, we have tried to improve the scalability of Jobe by moving from a single Jobe server to a cloud-based JobeInABox + Kubernetes setup. Things are mostly working really well, but we have some issues with Python3 exercises that use support or attachment files.

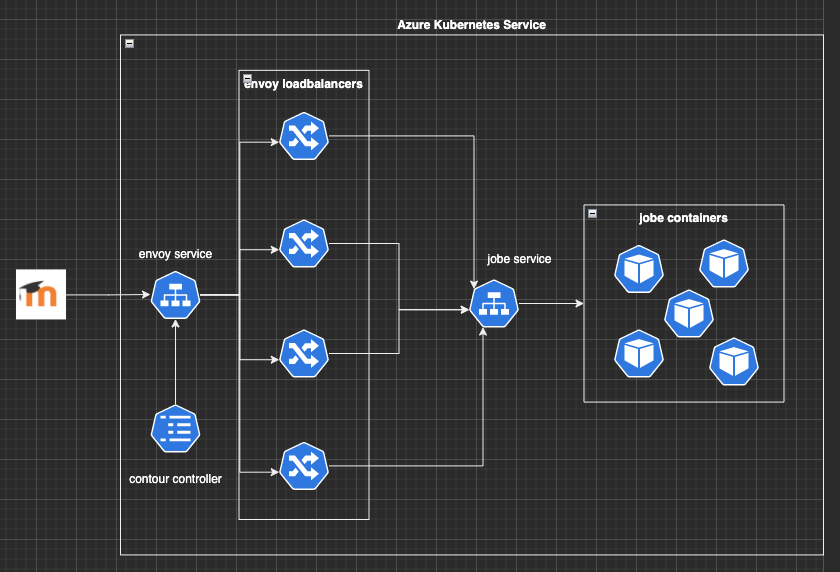

Here is a schematic figure of the setup developed by our IT department:

The JobeInABox + Kubernetes setup has been in production use for over a year. It runs smoothly for questions that do not include any support or attachment files. However, questions with support or attachment files behave erratically. Typically, when we create a new question, the first few runs fail with an "Unexpected error" message.

When we try again after a while (from a few minutes to a few hours), the tests run fine. After this, all runs complete successfully for students as well. However, the same error may reappear later on, somewhat randomly. We also have some pathological cases that always return the same error (these have as many as five support files).

We have read the very useful earlier discussions on Jobe load balancing from 2016. There, Richard mentioned that while individual tests can run on different Jobe servers, any single test should run entirely inside one Jobe server. Is this still true today? In our Kubernetes setup, all Jobe containers share the same file cache directory (/home/jobe/files). But clearly we might be missing some crucial detail here, as we get these errors that we did not see with a single Jobe server. Debugging the issue has been tough, as the error usually disappears after a few retries.
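
For anyone who wants to help reproduce this, here is a minimal debugging sketch that could be pasted temporarily into a Python3 question. It reports which container ran the job and whether the support files were present in the working directory. The function name `describe_sandbox` and the file names are hypothetical placeholders, and the sketch assumes that the host name seen inside the Jobe container identifies the Kubernetes pod (the Kubernetes default), so it may need adapting to other setups.

```python
import os
import socket


def describe_sandbox(expected_files):
    """Return a one-line report: host name, files present, files missing."""
    # Files that actually arrived in the job's working directory.
    present = sorted(os.listdir("."))
    # Support files the question expects but that are not there.
    missing = [name for name in expected_files if name not in present]
    return "host={} present={} missing={}".format(
        socket.gethostname(), present, missing
    )


# Hypothetical support file names; replace with the question's real files.
print(describe_sandbox(["data.xlsx", "helpers.py"]))
```

Comparing the reported host names between failing and passing runs might show whether the failures are tied to particular containers or to files that have not yet reached the job directory.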

If anyone has any ideas or advice related to a JobeInABox+Kubernetes setup, we would be very interested in hearing your thoughts!

Best wishes,

Antti Karttunen