Hello Richard & all,

I'm experimenting with a larger number of rows (500K) in a table in an SQLite .db file and, naturally, with larger support files. In this instance the .db file is 97.5 MB, which compresses to 21.2 MB as a .zip file. I then customised the template to extract the .zip file.
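For reference, here is a minimal sketch of the extraction step. It assumes the template is Python and that the support file sits in the current working directory. `extract_support_zip` is a hypothetical helper name, not part of CodeRunner or Jobe, and the sketch uses only the standard-library `zipfile` module:

```python
import zipfile
from pathlib import Path


def extract_support_zip(zip_path, dest_dir="."):
    """Extract every member of zip_path into dest_dir and return the member names."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as zf:
        # extractall streams each member to disk, so the whole .db is not held in memory.
        zf.extractall(dest)
        return zf.namelist()
```

In the template this would be called once before the test code runs, e.g. `extract_support_zip("nyc311_500K.15C.db.zip")`. Both the compressed and the extracted copies then sit in the working directory, so the disk footprint is roughly the sum of the two.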

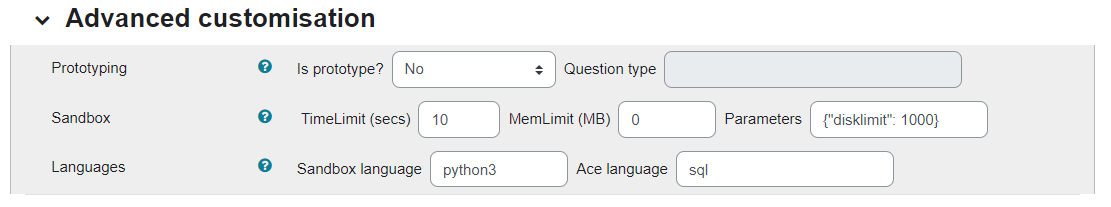

Then I set these advanced customisation options:

So far so good: the question runs OK. However, these advanced customisations don't seem to have much effect on the Jobe running environment, judging by these lines that I print from the customised Python template:

Available memory: 15.63 GiB

Total disk space: 47 GiB

Used disk space: 14 GiB

Free disk space: 30 GiB

Question files:

prog.in: 0.0MB

nyc311_500K.15C.db.zip: 21.287MB

prog.err: 0.0MB

nyc311_500K.15C.db: 97.586MB

prog.out: 0.0MB

__tester__.python3: 0.003MB

commands: 0.0MB

prog.cmd: 0.0MB

nyc311_500K.15C: 97.586MB

Total: 216.462MB
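The diagnostic lines above could be produced by something like the following sketch. It is an assumption about how such a printout might be written, not the actual template code: `report`, `path_size_bytes` and `available_memory_gib` are hypothetical names, and "MB" is taken as 10^6 bytes. It also shows one likely reason the numbers don't move: `shutil.disk_usage` and `os.sysconf` describe the whole filesystem and machine, not any per-run limits a sandbox may impose, so changing the advanced customisation options would not be expected to change them. `SC_AVPHYS_PAGES` is Linux-specific, so the memory line is skipped where it isn't available.

```python
import os
import shutil

GIB = 1024 ** 3
MB = 1000 ** 2  # assumption: the "MB" figures are decimal megabytes


def available_memory_gib():
    """Host-wide available physical memory in GiB, or None where sysconf lacks it."""
    if "SC_AVPHYS_PAGES" not in getattr(os, "sysconf_names", {}):
        return None
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_AVPHYS_PAGES") / GIB


def path_size_bytes(path):
    """Size of a file, or the combined size of every file under a directory."""
    if os.path.isdir(path):
        total = 0
        for root, _dirs, files in os.walk(path):
            for name in files:
                total += os.path.getsize(os.path.join(root, name))
        return total
    return os.path.getsize(path)


def report(work_dir="."):
    """Print host memory/disk figures and per-entry sizes; return the total bytes listed."""
    mem = available_memory_gib()
    if mem is not None:
        print(f"Available memory: {mem:.2f} GiB")
    # disk_usage describes the whole filesystem holding work_dir, not a per-run quota.
    usage = shutil.disk_usage(work_dir)
    print(f"Total disk space: {usage.total // GIB} GiB")
    print(f"Used disk space: {usage.used // GIB} GiB")
    print(f"Free disk space: {usage.free // GIB} GiB")
    print("Question files:")
    total = 0
    for name in os.listdir(work_dir):
        size = path_size_bytes(os.path.join(work_dir, name))
        total += size
        print(f"{name}: {size / MB:.3f}MB")
    print(f"Total: {total / MB:.3f}MB")
    return total
```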

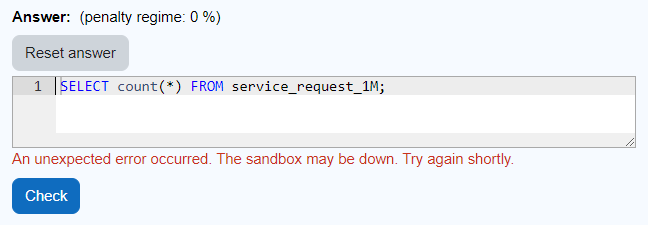

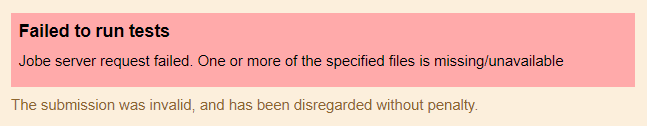

So, my question is this: if I use a larger file, say twice as big (1M rows), which is 195 MB uncompressed and 41.8 MB compressed, then pretty much no matter which advanced customisation options I use, the question always crashes with this error message:

Where do you think it might be failing? Needing about 500 MB of disk space doesn't seem like it should be a big deal.

What else can I try to work this out?
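One more diagnostic that may help narrow down the failure is printing the process's own resource limits. Unlike the host-wide figures above, these would reflect per-process caps if the sandbox sets them via `setrlimit`, which is an assumption about how Jobe enforces its limits. For example, a small maximum file size (`RLIMIT_FSIZE`) could stop a ~195 MB extraction even with 30 GiB free on disk. This sketch uses Python's standard `resource` module (Unix only); `limit_report` and `peak_rss_kib` are hypothetical helper names:

```python
import resource

# Standard resource-module constants (Unix); which ones a sandbox sets is an assumption to check.
LIMITS = {
    "address space (RLIMIT_AS)": resource.RLIMIT_AS,
    "data segment (RLIMIT_DATA)": resource.RLIMIT_DATA,
    "max file size (RLIMIT_FSIZE)": resource.RLIMIT_FSIZE,
    "CPU time (RLIMIT_CPU)": resource.RLIMIT_CPU,
    "processes (RLIMIT_NPROC)": resource.RLIMIT_NPROC,
}


def _fmt(value):
    # RLIM_INFINITY means "no limit"; other values are bytes, seconds, or a count.
    return "unlimited" if value == resource.RLIM_INFINITY else str(value)


def limit_report():
    """Return one line per limit showing the soft and hard values for this process."""
    lines = []
    for label, which in LIMITS.items():
        soft, hard = resource.getrlimit(which)
        lines.append(f"{label}: soft={_fmt(soft)} hard={_fmt(hard)}")
    return lines


def peak_rss_kib():
    """Peak resident memory of this process so far (kilobytes on Linux)."""
    return resource.getrusage(resource.RUSAGE_SELF).ru_maxrss


for line in limit_report():
    print(line)
print(f"Peak RSS so far: {peak_rss_kib()} KiB")
```

Calling `peak_rss_kib()` again after opening the database and running the queries would show roughly how much memory the run actually needed, which can then be compared against the limits printed first.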

Kind regards,

Constantine