Hi,

I am trying to author some questions that use random data in a file: the student's program reads the data and outputs results based on it (it's actually stage one of an assembler). I am familiar with the discussions elsewhere regarding David Bowes and his fork of CodeRunner. However, I am looking for something simpler and more lightweight.

I got very close to getting this working using just a customized template. The template generates the file containing the random data, plus a variable holding the expected output from the program. Where I am stuck is getting the grader to use the contents of that variable in place of the TEST.expected or TESTCASES[n].expected attribute. I have tried updating TESTCASES[n].expected inside the template (not trivial, but possible; the index n seems almost random: for my two tests, n=2 and n=7), but the grader ignores the change.

I could create a custom grader, but that seems like a lot more work. Where can I find the default implementation of the exact-match grader, to use as a basis? Or is there a way of changing the expected output from inside the custom template?
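For reference, this is roughly the shape I imagine a custom grader taking. It is only a sketch. It assumes, from my reading of the template grader documentation, that a per-test template grader prints a JSON object with a `fraction` field and optional `expected` and `got` fields. The helper names (`make_random_data`, `expected_output`, `normalise`, `grade`) are made up for illustration, the "reference solution" is a placeholder rather than my real assembler stage, and the whitespace trimming is my guess at what an exact-match comparison would tolerate, not the actual default grader.

```python
import json
import random


def make_random_data(seed, count=5):
    """Hypothetical generator: returns the lines to write to the data file."""
    rng = random.Random(seed)
    return [str(rng.randint(0, 255)) for _ in range(count)]


def expected_output(lines):
    """Placeholder reference solution: echoes each value as two hex digits."""
    return "\n".join(format(int(v), "02X") for v in lines) + "\n"


def normalise(text):
    """Strip trailing whitespace on each line and trailing blank lines."""
    return "\n".join(line.rstrip() for line in text.rstrip().splitlines())


def grade(expected, got):
    """Build the per-test result object that the template would print."""
    ok = normalise(expected) == normalise(got)
    return {"fraction": 1.0 if ok else 0.0, "expected": expected, "got": got}


if __name__ == "__main__":
    data = make_random_data(seed=42)
    expected = expected_output(data)
    got = expected  # stand-in for the captured output of the student's program
    print(json.dumps(grade(expected, got)))
```

In a real template, `got` would come from actually running the student's program against the generated file, and the seed would have to vary per attempt.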

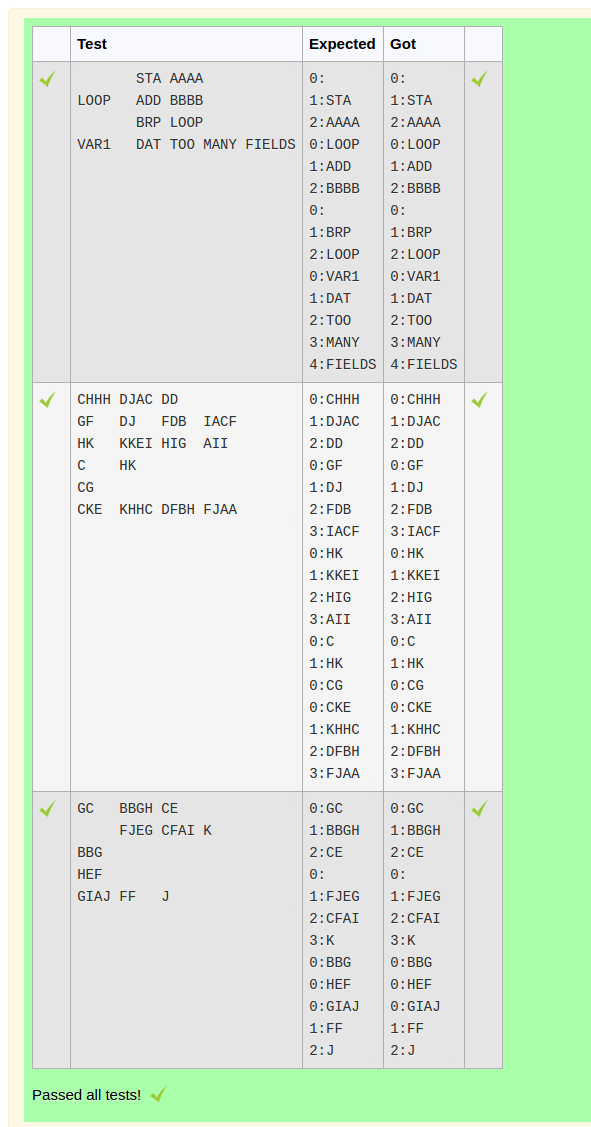

I've posted the question below. The first test uses known static data; the second is the one based on random data.